- #HOW TO INSTALL APACHE SPARK 2.1 ON MAC OS SIERRA DRIVER#

- #HOW TO INSTALL APACHE SPARK 2.1 ON MAC OS SIERRA DOWNLOAD#

To use cluster mode, you must start the MesosClusterDispatcher in your cluster via the sbin/start-mesos-dispatcher.sh script, passing in the Mesos master URL (e.g. mesos://host:5050). This starts the MesosClusterDispatcher as a daemon running on the host. If you want to run the MesosClusterDispatcher with Marathon, you need to run it in the foreground instead (i.e. bin/spark-class .mesos.MesosClusterDispatcher). Note that the MesosClusterDispatcher does not yet support multiple instances for HA.
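Here is a minimal sketch of the two cluster-mode steps as shell functions. It assumes SPARK_HOME points at an unpacked Spark 2.1 distribution. The helpers `start_dispatcher`, `submit_cluster_mode` and `run` are hypothetical names, not part of Spark. The dispatcher's default port (7077) and the `--deploy-mode cluster` flag follow spark-submit's conventions. The application jar URL you pass must be reachable from the Mesos slaves. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch only: assumes SPARK_HOME points at an unpacked Spark 2.1 distribution.
# Set DRY_RUN=1 to print each command instead of executing it.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Start the MesosClusterDispatcher as a daemon on this host.
# $1: Mesos master URL, e.g. mesos://host:5050
start_dispatcher() {
  run "${SPARK_HOME:?SPARK_HOME must be set}/sbin/start-mesos-dispatcher.sh" --master "$1"
}

# Submit an application in cluster mode through the dispatcher.
# $1: dispatcher URL (hypothetical host; the dispatcher listens on 7077 by default)
# $2: main class, $3: application jar URL reachable from the Mesos slaves
# Any remaining arguments are passed through to the application.
submit_cluster_mode() {
  local dispatcher=$1 main_class=$2 app_jar=$3
  shift 3
  run "${SPARK_HOME:?SPARK_HOME must be set}/bin/spark-submit" \
    --master "$dispatcher" \
    --deploy-mode cluster \
    --class "$main_class" \
    "$app_jar" "$@"
}
```

For example, `DRY_RUN=1 start_dispatcher mesos://host:5050` only prints the dispatcher command, which is a cheap way to check the paths before starting anything.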

#HOW TO INSTALL APACHE SPARK 2.1 ON MAC OS SIERRA DRIVER#

In client mode, a shell can be started against the cluster with bin/spark-shell --master mesos://host:5050.

Spark on Mesos also supports cluster mode, where the driver is launched in the cluster and the client can find the results of the driver from the Mesos Web UI.
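The --master value uses the mesos:// scheme. The hypothetical helpers below build either a single-master URL or, for a ZooKeeper-managed multi-master setup, a URL of the form mesos://zk://host1:2181,host2:2181/mesos. This is a sketch. The `/mesos` ZooKeeper path is the usual default and is an assumption here, so check it against your own Mesos configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that build the value passed to --master.

# Single Mesos master: host plus optional port (Mesos masters listen on 5050 by default).
mesos_url_single() {
  echo "mesos://$1:${2:-5050}"
}

# Several masters coordinated by ZooKeeper: pass each ZooKeeper host:port.
# The /mesos znode path is an assumption; adjust it to your Mesos setup.
mesos_url_zk() {
  local IFS=,
  echo "mesos://zk://$*/mesos"
}
```

Used with the shell, that becomes `./bin/spark-shell --master "$(mesos_url_single host)"`.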

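The driver locates the Mesos native library through the MESOS_NATIVE_JAVA_LIBRARY environment variable, usually set in conf/spark-env.sh. Its value is typically the lib/libmesos.so file under the Mesos installation prefix, which is /usr/local by default (i.e. /usr/local/lib/libmesos.so). On Mac OS X, the library is called libmesos.dylib instead of libmesos.so. Now, when starting a Spark application against the cluster, pass a mesos:// URL as the master when creating a SparkContext.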
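A sketch of the matching conf/spark-env.sh lines follows. It picks the .dylib name on Mac OS X and the .so name elsewhere. MESOS_PREFIX is a hypothetical helper variable, not something Spark reads. The SPARK_EXECUTOR_URI default shown is a made-up HDFS location, so replace it with wherever you actually uploaded the Spark package.

```shell
# conf/spark-env.sh (sketch): point the driver at the Mesos native library.
# MESOS_PREFIX is a hypothetical helper variable; /usr/local is the default install prefix.
MESOS_PREFIX="${MESOS_PREFIX:-/usr/local}"

# Mac OS X names the library libmesos.dylib; Linux uses libmesos.so.
if [ "$(uname -s)" = "Darwin" ]; then
  export MESOS_NATIVE_JAVA_LIBRARY="$MESOS_PREFIX/lib/libmesos.dylib"
else
  export MESOS_NATIVE_JAVA_LIBRARY="$MESOS_PREFIX/lib/libmesos.so"
fi

# Where executors fetch the Spark package from.
# Hypothetical URL: replace it with the location you uploaded the package to.
export SPARK_EXECUTOR_URI="${SPARK_EXECUTOR_URI:-hdfs://namenode:8020/spark/spark-2.1.0.tar.gz}"
```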

#HOW TO INSTALL APACHE SPARK 2.1 ON MAC OS SIERRA DOWNLOAD#

Download and build Spark using the instructions here.The dev/make-distribution.sh script included in a Spark source tarball/checkout. Or if you are using a custom-compiled version of Spark, you will need to create a package using To host on HDFS, use the Hadoop fs put command: hadoop fs -put spark-2.1.0.tar.gz Download a Spark binary package from the Spark download page.The Spark package can be hosted at any Hadoop-accessible URI, including HTTP via Amazon Simple Storage Service via s3n://, or HDFS via hdfs://. Package for running the Spark Mesos executor backend. When Mesos runs a task on a Mesos slave for the first time, that slave must have a Spark binary (defaults to SPARK_HOME) to point to that location.

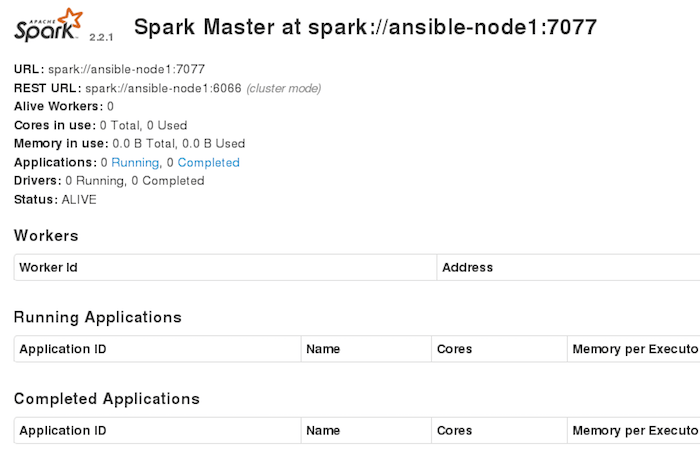

To use Mesos from Spark, you need a Spark binary package available in a place accessible by Mesos, andĪ Spark driver program configured to connect to Mesos.Īlternatively, you can also install Spark in the same location in all the Mesos slaves, and configure :5050 Confirm that all expected machines are present in the slaves tab. To verify that the Mesos cluster is ready for Spark, navigate to the Mesos master webui at port The Mesosphere installation documents suggest setting up ZooKeeper to handle Mesos master failover,īut Mesos can be run without ZooKeeper using a single master as well.
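The custom-build path can be sketched as two shell functions. The sketch assumes the current directory is a Spark source checkout and that a working `hadoop` client is on the PATH. `build_package`, `upload_package` and `run` are hypothetical helpers. `--name` and `--tgz` are make-distribution.sh options that label the build and produce a tarball. The HDFS destination is a placeholder you supply. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: package a custom Spark build and host it on HDFS.
# Assumes the current directory is a Spark source checkout and `hadoop` is on PATH.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Build a binary tarball from source; $1 is a label embedded in the tarball name.
build_package() {
  run ./dev/make-distribution.sh --name "${1:-custom}" --tgz
}

# Copy a local Spark tarball to a Hadoop-accessible destination.
# $1: local tarball, $2: destination URI (hypothetical, e.g. hdfs://namenode:8020/spark/)
upload_package() {
  run hadoop fs -put "$1" "$2"
}
```

For a precompiled download, skip `build_package` and call `upload_package` directly on the downloaded tarball.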

When using Mesos, the Mesos master replaces the Spark master as the cluster manager. When a driver creates a job and starts issuing tasks for scheduling, Mesos determines which machines handle which tasks. Because it takes other frameworks into account when scheduling these many short-lived tasks, multiple frameworks can coexist on the same cluster without resorting to a static partitioning of resources.

To get started, install Mesos and then deploy Spark jobs via Mesos, following the steps in this post. Spark 2.1.0 is designed for use with Mesos 1.0.0 or newer and does not require any special patches of Mesos. If you already have a Mesos cluster running, you can skip this Mesos installation step. Otherwise, installing Mesos for Spark is no different from installing Mesos for use by other frameworks. You can install Mesos either from source or using prebuilt packages.
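Because Spark 2.1.0 expects Mesos 1.0.0 or newer, a quick pre-flight check can save a confusing failure later. This is a sketch around a hypothetical function `mesos_version_supported`. It takes the version string reported by your Mesos installation (how you obtain that string is left open here) and compares only the major component, since 1.0.0 is the stated minimum.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: Spark 2.1.0 expects Mesos 1.0.0 or newer.
# Takes a version string such as "1.0.1"; only the major component is compared.
mesos_version_supported() {
  local major=${1%%.*}
  case "$major" in
    ''|*[!0-9]*) return 2 ;;   # not a numeric version string
  esac
  [ "$major" -ge 1 ]
}
```

For example, `mesos_version_supported 0.28.2` returns a non-zero status, while `mesos_version_supported 1.0.0` succeeds.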

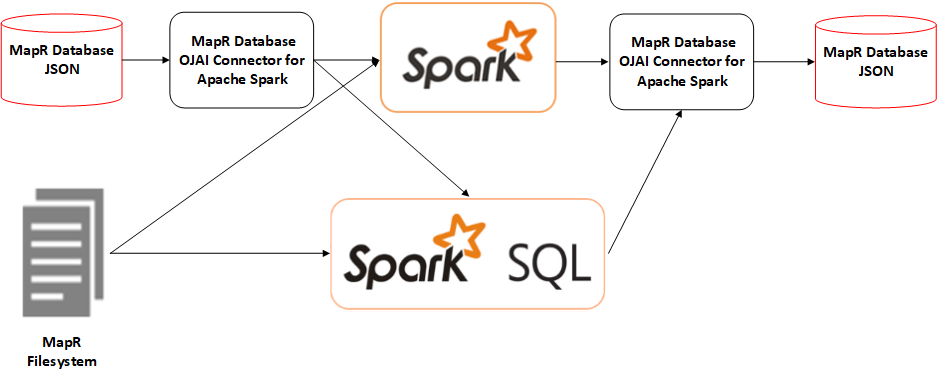

Spark can run on hardware clusters managed by Apache Mesos. The advantages of deploying Spark with Mesos include:

- dynamic partitioning between Spark and other frameworks
- scalable partitioning between multiple instances of Spark

In a standalone cluster deployment, the cluster manager in the diagram above is a Spark master instance.
